Everywhere I go, I see massive amounts of product waste: development work delivered on time/on budget that doesn’t drive sales or customer satisfaction or business improvement. Yet somehow we don’t see this waste, or mis-attribute it to engineering process problems.

I’ve written a lot about the organizational and conceptual gap between product/engineering teams and the “go-to-market” or sales/marketing side of tech companies. (See Selling Problems Instead of Philosophy or Prioritization is a Political Problem or Abandoning “MVP”.) At many companies, there’s a deep-rooted lack of understanding about what the other side does — and why they do it. We shout past each other using secret keywords (“agile”) and assuming bad intent.

I think serious product discovery and end user validation may be stuck in this cultural/conceptual gap. Product Management (and Engineering & Design) see continuous customer interviewing and intellectually honest experimentation as this decade’s biggest game-changer: better discovery is how we deliver more value with the same development staff. (See Teresa Torres’s bestselling product book.)

But this is deeply counter-intuitive to non-engineering executives and their departments. They think of software development as a manufacturing process with classic assembly-line success metrics: [a] building exactly what the “go-to-market” team tells Engineering to build, [b] in the order demanded, and [c] done as fast/cheaply as possible. Product Management (if it exists in old-style IT) is tasked with collecting each organization’s shopping list, then providing enough technical specifications that Engineering can build it.

Horribly wasteful and frustrating! At almost all of my clients, I see major product waste: products released on time/on budget that don't deliver positive business outcomes. Dozens of new features that are rarely used and make the underlying products harder to navigate. Beautiful workflows designed for the wrong audience. Local optimizations that reduce total revenue. Single-customer versions that slowly consume our entire R&D organization with one-off single-customer support and upgrades. Acquired products that we thought could easily be integrated but (in fact) need total replatforming to interoperate with our main products. Project teams that ship v1.0 and are then re-assigned elsewhere, leaving orphaned software that is quickly discarded without essential bug fixes or v1.1 features. Commitments to new capabilities that we know can’t work as promised.

Let’s call this “product waste” as distinct from “engineering waste.” Product waste is building the wrong thing. Shipping enhancements that don’t deliver expected business outcomes. Or letting one big customer define our technical architecture. Or changing our SEO strategy based on some outsider’s untested model. Or adding so many fields to our subscription page that signup rates drop. Or signing a deal that’s outside our target market and our competence and our product capabilities. Or enabling Sales to create a new product bundle for each new prospect.

IMO, product waste is a bigger source of lost energy and frustration and bad customer outcomes and engineering staff churn than engineering process waste. Kanban vs. Scrum makes little difference if customers stay away in droves. Better automated testing addresses quality, not market adoption. Something that’s brilliant but never used belongs in the rubbish bin.

This distinction is essential: I see senior execs push endlessly for more “development throughput” without recognizing that most of their requests deliver zero (or negative) customer value and incremental revenue.

So as a bilingual exec (speaking both tech and go-to-market), I want to reframe this as a discussion about money. Hard currency. I want to move us from development philosophy to why executives and the Board should care about discovery. On the maker side, we often talk qualitatively about psychological safety, discovery techniques, agile methods, architecture, technical debt… but it’s cold hard cash that gets the attention of the business side.

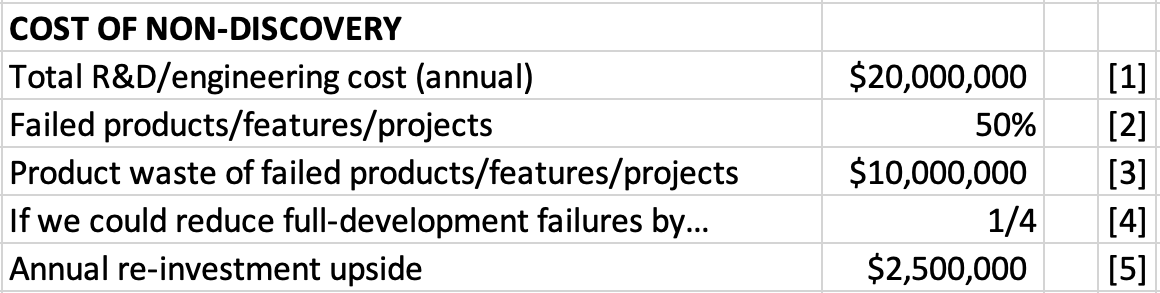

Enough preamble. Here’s an overly simplistic spreadsheet exercise about how good discovery, true end user/buyer validation, and relentless skepticism about our own judgment/track record could save millions and eventually lead to better business results.

COST OF NON-VALIDATION

Let’s walk through a simple (but perhaps helpful) financial model. Our goal is a rough guesstimate of how much product waste we create, independent of the rest of the “maker” process: development, design, tech writing, QA, cloud hosting…

Taking each line in turn:

[1] Total R&D/Engineering Cost

...is everything we spend on the “maker” side of the house. Developers, designers, product managers, tech writers, test engineers, DevOps, cloud hosting, security consultants, productivity software, laptops, office space, health insurance, payroll taxes, Zoom, Jira, recruiting, training, logo hoodies, sprint review donuts, copies of Managing the Unmanageable. Everything. In the SF/Bay Area, that’s roughly $2M/year for every 8-10 person team.

Important to note that R&D is almost entirely people: salaries plus benefits for developers and designers and tech writers and product managers. Hiring and firing are the main drivers of R&D costs – relatively fixed in the short term, regardless of whether we build hit products or losers. (And ramping development staff up/down/up/down on a project basis is almost always a failed approach.)

It’s rare for product folks to have R&D spending numbers handy. But Engineering usually tracks this, and the Finance team reports it to our CFO and Board every quarter. Thus the quarterly demand for some (mythical) development productivity metric: how do we know that we’re getting our money’s worth?
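If nobody can find the real number, the team-cost rule of thumb above gives a quick first guess. Here is a minimal Python sketch, assuming the roughly $2M/year per 8-10 person team figure applies to your whole maker organization. The function name `rd_cost_range` and the 80-person headcount are hypothetical, chosen only for illustration.

```python
def rd_cost_range(headcount, cost_per_team=2_000_000, team_size=(8, 10)):
    """Return a (low, high) annual R&D cost estimate for a maker-side headcount.

    Assumes every team costs about the same, which is a simplification.
    """
    small, large = team_size
    low = headcount / large * cost_per_team   # staff grouped into 10-person teams
    high = headcount / small * cost_per_team  # staff grouped into 8-person teams
    return low, high


# Hypothetical organization of 80 makers (developers, designers, PMs, QA...)
low, high = rd_cost_range(80)
print(f"Estimated R&D cost: ${low / 1e6:.1f}M to ${high / 1e6:.1f}M per year")
```

Treat the result as a placeholder until Finance supplies the figure they already report to the Board.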

[2] Failed or Low-Value Products/Features/Projects

Until we unearth the data, no executive team believes that we have a validation or discovery problem. As leadership teams, we’re all guilty of recency bias, roadmap amnesia, failure to measure actual outcomes, magical thinking, and blame-heavy interrogations that discourage admitting failure. We simplify problems, minimize system complexity, and jump to ‘obvious’ solutions. We avoid setting clear success criteria. Most execs tell me that they are so tuned into their business and customers that they are right 90% of the time! Our egos get in the way of dispassionate analysis of our own ideas.

But when we actually sift through recent history, it’s a different story. One client of mine found that half of their product work had zero or negative impact on key business metrics. Half. Other clients of mine found product waste in the 35%-70% range. Berman & Van den Bulte found that 25%+ of A/B tests give us false positives. But at some companies, doing rigorous failure analysis has major political repercussions.

What would I include in product waste?

- Newly launched products that die on arrival from incomplete understanding of real customers’ complex needs

- Features we build for one customer, but justify based on wider (imagined) adoption. “Everyone will need that!” A year later, only two clients are using it, and we need to support that custom code for 5 more years

- Products or expensive features that don’t have clear success criteria. We ship v1.0 on time and declare victory whether we sell 10 units, 10k units or 10M units.

- Replatforming projects abandoned part-way when our optimistic schedules run long (even though we all knew this was likely). Or replatforming projects that were intended both to be perfectly backward compatible (i.e. nothing new, nothing changed, 100% painless upgrade) and also intended to give customers compelling reasons to upgrade (i.e. major new capabilities).

- Products or companies we acquired on the assumption that integrating them would be easy (but two years later, cross-sell and shared capabilities are near zero)

- Shipping features because Gartner says our competitors have them

- Work funded on a project basis, where a minimal v1.0 inevitably needs v1.5 to address known gaps, but the team is already deployed elsewhere. v1.0 quickly sinks into obscurity.

So the first step is to look back a few quarters, list everything we built (above a reasonable size), and do an intellectually honest scan for product waste. What could we have identified in advance as problematic, before we spent $M's building it? Where are we making consistently poor decisions or assumptions? This can be challenging, even ego-bruising, and won’t happen in corporate cultures based on blame or punishment.

We'll use 50% as our product failure starting point. Unless we’re at the rarest of organizations, anything under 35% is probably sugarcoating or self-deception or fear of reprisal.

[3] Annual Cost of Failed Products/Features/Projects

Row 1 * Row 2

[4] If We Could Reduce Full-Development Failures By…

We can’t fix everything at once.

The discovery and development cycle is complex, semi-structured, and includes lots of bets about the future. The world is chaotic. We can’t always be right, and will never reduce product waste to zero. And we want to avoid analysis paralysis. But better discovery before we start full development can show sizable improvement. BTW, that must be paired with leadership-level cultural changes to reward thinking before action, and executive applause when we spend a little time/money to sidestep a major disaster. We have to celebrate learning, not just delivery.

I’ve seen some companies improve this by ⅕ or ¼ or ⅓, while others aggravate the situation by adding more top-down C-level portfolio reviews and committees. So for this exercise, let’s posit that reducing current waste by ¼ is possible. Your mileage may vary.

[5] Annual Re-investment Upside

Row 3 * Row 4 gives us an order-of-magnitude guess for effort that could be spent more thoughtfully. People and time and smart thinking that could be put to better use. And since each item is intended to drive positive outcomes, making better choices lets us boost business results with current budgets.

In other words, smarter discovery lets us put more of our effort into likely winners. A little product time avoids squandering major development energy. More market-based choices and fewer executive overrides.
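Rows [1] through [5] can be penciled out in a few lines of Python. This is a sketch under stated assumptions, not the spreadsheet itself: the $20M R&D budget is a made-up placeholder, the function name `product_waste_model` is hypothetical, and the 50% failure rate and ¼ reduction are the starting points suggested above.

```python
def product_waste_model(total_rd_cost, failure_rate=0.50, reduction=0.25):
    """Rough product-waste model following rows [1]-[5] of the walkthrough."""
    cost_of_failures = total_rd_cost * failure_rate      # row 3 = row 1 * row 2
    reinvestment_upside = cost_of_failures * reduction   # row 5 = row 3 * row 4
    return {
        "total_rd_cost": total_rd_cost,                  # row 1
        "failure_rate": failure_rate,                    # row 2
        "cost_of_failures": cost_of_failures,            # row 3
        "reduction": reduction,                          # row 4
        "reinvestment_upside": reinvestment_upside,      # row 5
    }


# Hypothetical company spending $20M/year on R&D (an assumed figure)
model = product_waste_model(20_000_000)
print(f"Annual cost of failed work: ${model['cost_of_failures'] / 1e6:.1f}M")
print(f"Annual re-investment upside: ${model['reinvestment_upside'] / 1e6:.1f}M")
```

Swap in your own row [1] and row [2] numbers. The point is the order of magnitude, not the decimal places.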

Sound Byte

If we can describe the financial upside of good discovery, we can help our go-to-market-side partners see why this is important. It's naive to assume that our whole organization connects "learning" or "validation" with "money" or "product success." So let's honestly identify failure patterns, pencil out costs, and start to improve how we make decisions.

Which then prompts a series of questions from CEOs and finance/market-side executives:

Q: If we do better discovery, can we chop R&D?

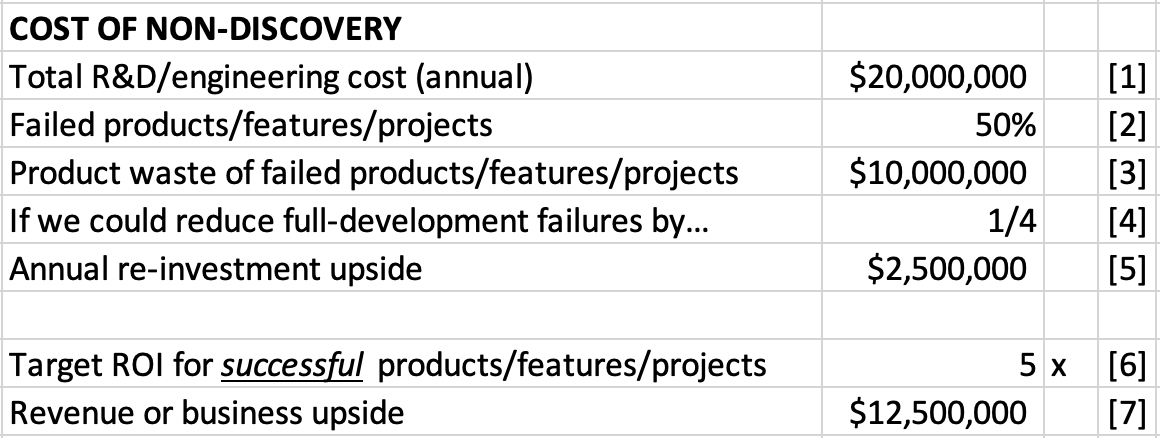

No, sorry. Your company (like all others) has an infinite list of projects, products and initiatives that could move the business ahead. There is no shortage of ideas or suggestions or demands. Every company’s development group is 8x or 15x or 45x over-subscribed. So doing good validation on things at the top of our backlog will let the worthiest candidates move forward. Technology is a critical economic driver for almost every company, so this eventually provides a big business bump.

Flipping this around, we probably have an internal hurdle rate or target ROI for customer-facing development work of 4x-6x. In other words, we should have reasonable expectations that a $50k technical investment could pay back $200k-$300k. Reducing product waste gives us a chance to fund similar-sized work with higher likely returns.

Assigning 5x target return on row [6] suggests that we could boost revenue (or reduce costs) enough to impress our investors [7].

These are directional, not precise. Our finance team may want forecasts to 5 decimal places, but product futures are ± 50% on a good day. With the wind at our backs and optimism in our hearts. So the upside for our hypothetical company is more like $12M ± $8M.
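To make "directional, not precise" concrete, here is a hedged Python sketch that applies a target return multiple to a re-investment figure and wraps it in a symmetric ± 50% band. The $2.5M input is an assumed number (not taken from the spreadsheet), the function name `upside_range` is hypothetical, and treating the uncertainty as symmetric is a simplification.

```python
def upside_range(reinvestment, target_multiple=5.0, uncertainty=0.50):
    """Return (low, expected, high) business upside for re-invested effort."""
    expected = reinvestment * target_multiple
    low = expected * (1 - uncertainty)    # pessimistic end of the band
    high = expected * (1 + uncertainty)   # optimistic end of the band
    return low, expected, high


# Assumed $2.5M of re-invested effort at each end of the 4x-6x hurdle range
for multiple in (4.0, 5.0, 6.0):
    low, expected, high = upside_range(2_500_000, target_multiple=multiple)
    print(f"{multiple:.0f}x: ${expected / 1e6:.2f}M "
          f"(${low / 1e6:.2f}M to ${high / 1e6:.2f}M)")
```

The width of the band is the message: a forecast this fuzzy should be presented as a range, never as a single number.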

Q: How soon will things improve (and sales go up)?

This is a major change in how companies behave at the executive level, not just some new processes or forms for Product to complete. It calls for different decision-making: specifically (in enterprise software companies) shifting approval of single-customer deal-driven commitments from Sales to Product. The biggest obstacles are organizational:

- C-suite belief that, even though we have an approved product plan/roadmap of carefully validated work, we should catapult customer-written requirements from our largest deals ahead of planned work. Without serious validation or discovery. Without deep dives to see if they are correct or make sense. Without market data or commitments that 10 other enterprise customers will buy exactly that same widget. Without product/engineering having an equal voice at the executive table. By mid-quarter, our product plan is in shambles even though many of these huge deals won't close.

- Services or customer success teams whose comp plans encourage them to deliver more (and more and more) professional services, even though that slows down deployments and throttles our ability to add new customers. And eventually creates an enormous migration/upgrade blockage. When we put as much energy into onboarding/customizing/configuring/integrating our software as we put into building the core product, we become a professional services firm hiding in a product company.

Changing how executive teams make decisions is at least a year-long journey.

This is also really hard work for the product team. Good discovery is a skill, and many product managers don't (yet) have the experience or tools or mentoring to get it right. Figure that you'll need a couple of quarters to aggressively train/mentor product+design+engineering staff on validation techniques. And another quarter or two to see results. So we should be thinking about a year to see major improvements. (If your company has a three-month attention span before dropping one shiny concept for the next, don't expect real change.)

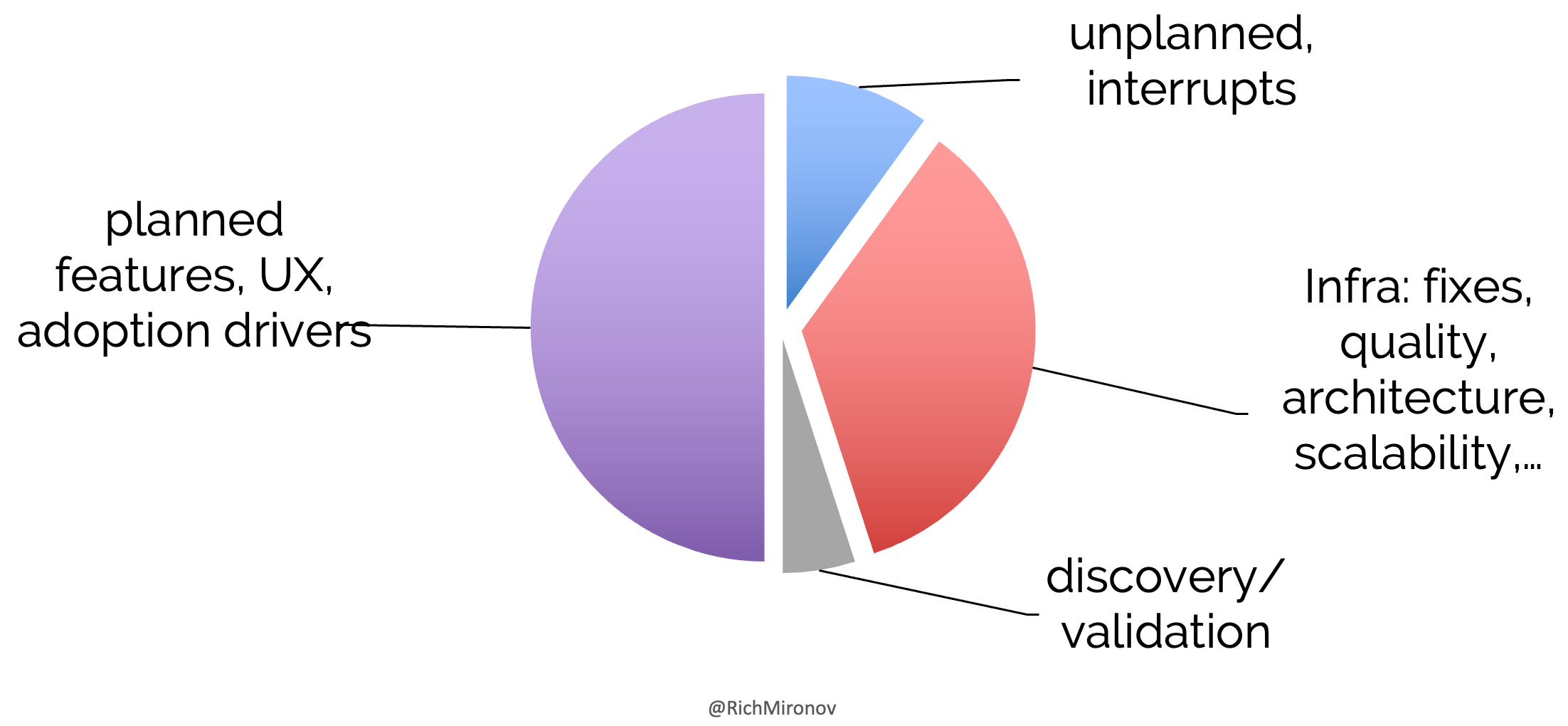

Q: What about ROI for tech debt, design, and architecture?

Architecture and technical debt resist this kind of simple ROI. Most inward-facing engineering work is hard to tie directly to revenue. It's very difficult to attribute an individual customer win to a single feature or capability, and tracking this company-wide is a colossal effort. Proving that a specific security fix actually prevented an intrusion is maddeningly hard. Likewise tough to verify that common design templates actually boost customer satisfaction. Or that better DevOps will directly lead to lower bug counts. But we have to do these to stay in the software business.

Users expect scalability, geographic failover, privacy controls, password change processes, and fully tested products. If they are calling to complain about lost data or misbehaving commands, we're too late.

Technical investments come in a few broad categories, so it's useful to make some strategic allocation decisions before sorting individual tickets. Separating new features and customer initiatives from infrastructure and -ilities lets us compare similar items with similar objectives. It reduces our tendency to apply a simple "current quarter revenue-only ROI" metric to a complex mix of short-term and long-term investments.

I counsel clients to apply hard-nosed market and ROI thinking to the feature half of their R&D spend. And protect the infrastructure half from go-to-market encroachment. ("We'll shift 100% to features, but just for this quarter. Then we promise we'll get back to a sustainable investment model. Prospects and salespeople will be much more reasonable next quarter.") We'll use qualitative, inward-looking methods of prioritizing platform work / bugs / test infrastructure / help systems / common UX / refactoring. Good approach: asking the development team what 2 improvements would make their jobs easiest; asking Customer Support which 5 bugs represent the most (or toughest) calls.

Q: I want our company to be product-led but not change how we set priorities, who makes decisions, or what account teams commit to our largest customers.

Let me know how that turns out.