We’ve laid out three fundamental facts about commercial software: your development team will never be big enough; all of the profits are in the nth copy or nth subscriber; and the software bits we release are not the product. These led to three laws for software businesses (the Law of Ruthless Prioritization; the Law of Build Once, Sell Many; and the Law of Whole Product).

One last market observation is that you can’t outsource your strategy. Not to your customer base, not to your sales force, not to a strategy template, and not entirely to a prioritization algorithm. Product strategy is a prediction about how your future actions will move the market, and therefore needs a range of inputs and scenarios. Plus some strong beliefs about where things are going. So let’s stand up a few of the most popular strategy outsourcing approaches, and then knock each one down.

Can’t Customers Decide For Us?

We need to listen to our customers all the time. Of course. But input is different from fully delegating our strategy to a survey or forum. We must anticipate biases and limitations from every kind of customer input.

Customer Advisory Boards (CABs) bring together the largest, deepest-pocketed, longest-standing customers for extended roadmap presentations and executive feedback. This is good for spotting broad trends among major customers, and great for sales teams that want to reinforce “strategic” relationships. Attendance also boosts customer commitment, since anyone willing to sit through two days of product pitches must be a supporter.

Concerns: CABs are rarely representative of current or targeted customers: they skew toward executives (versus end users) and toward accounts with multi-year revenue. Everyone is on their best behavior. Scripted presentations and motivational speakers crowd out real collaboration. CABs are important from a sales point of view, but IMO deliver few fresh ideas.

User Forums give our user communities a way to ask questions, offer help, and propose / vote up enhancements. Typically these are tied to support boards or marketing campaigns, and reflect what the most active users are thinking right now. Often, we learn as much from the search patterns of non-voters as from the votes themselves.

Concerns: Forums skew toward more active/more technical users and focus on specific issues or point-in-time requests. They tend to propose solutions (“change this, add that button, build more connectors”) rather than frame problems (“I want to visualize real-time transactional data as well as archived offline info”). Especially if we promise to implement the topmost items, we encourage gaming via social media on narrow issues. Low participation and weak demographics mean we may not know what the vast majority of users think.

More importantly, there’s typically not much news here. Product managers should know what’s on the minds of users/customers, and we get few surprises in these forums. For instance, mobile phone vendors will surely be asked for faster data rates, lighter devices, longer battery life, bigger (and smaller) screens, etc. We have to apply good judgment to see what’s interesting or new.

Even more importantly, these forums are lagging indicators. Kano analysis tells us that we will never differentiate our products by only matching established checklist items (aka baseline features) that the mass audience already gets elsewhere. We need one or two truly interesting items (aka delighters or exciters) in each major release. Yet vote-ups will typically highlight the well-known, obvious, already-popular features that our competitors have been shipping for a year or two. There may be gold hidden farther down the list, but finding the good stuff – and then understanding, spec’ing, sizing, prioritizing and building it – requires strategy and insight and empathy beyond simple vote counting.

Customer showcases/sprint-level demos bring a few customers into product- or scrum-team-level demonstrations every few weeks. These customers are usually your most technical, most committed, and most eager for early code access. Demos may be highly technical, showing partial user flows and early APIs. Showcases are great for spotting engineering issues, migration problems, and wrong assumptions about how products will actually fit together.

Shortcomings: Showcase customers are unlike all of your other users: they love you intensely, know your tech deeply, and are willing to look at early versions over and over. They may never have to install from scratch again, or make newbie mistakes with your awkward user experience. Letting these 2 or 3 experts define your strategy pulls you away from the broader market and toward ever-more-custom-designed solutions. Great input, but not a strategy, unless you want to move toward contract development.

You’ll also find great insights from win/loss analysis (about how to close more near-term deals), industry analysts (who shape next year’s RFP checklists), and social media (for cause-related and political issues). No single source dominates, though, or can be used without filtering. Strategy starts with some weighting of disparate inputs and arrives at a clear future goal.

Can’t Sales Decide For Us?

If each of the above approaches is partial/incomplete, imagine giving your sales team the keys to the product strategy car. This quarter’s largest deals, regardless of segment or problem or technology, will get their features onto our roadmap. (Some items, of course, conflict with each other or are technically impossible.) Next quarter, we wipe the roadmap clean and start again.

I love Sales organizations, especially since they pay our salaries. But we don’t hire/reward/promote Sales for building long-term product strategies. Instead, they make twice our salaries to close individual deals and nurture specific accounts. Good input for us, not unbiased market truth.

And so on. We need to get a wide variety of inputs from many market sources, recognize each one’s biases, then weigh those against our strategies and technical challenges. No one customer group automatically outvotes the others.

Can’t We Just Sort the Backlog Based on ROI?

This would seem like an obvious approach: assign business value to every epic or major feature; estimate development effort; and build those with the highest value per story point. Engineering teams always start here, and project management frameworks all assume that we can actually do this. (For instance, see SAFe’s Weighted Shortest Job First.) This might work for internal IT organizations that have large numbers of unrelated small projects intended for internal-only customers, but I have not seen this work (by itself) for market-focused product companies. Here are three of my concerns:

Revenue estimates for new features or products have huge error bars. Most companies don’t know (with any accuracy) what their overall sales will be next quarter, so revenue forecasts for individual product improvements are fraught with uncertainty. (See my tirade on this.) In unguarded moments, product managers will admit that their feature-level revenue SWAGs might easily swing 3x based on a long list of imponderables. We’re required to assign “business value” to each epic or major item, but we know that the second decimal place is worthless. Sometimes the first decimal place…

And initial business value estimates are made long before development actually starts, in order to prioritize work. So timing and implementation details don’t yet exist. I’m always willing to sort business value into t-shirt sizes (S, M, L, Justin Bieber), but neither development effort nor business value numbers are accurate enough to separate the #1 feature from #2, #3 or #4.

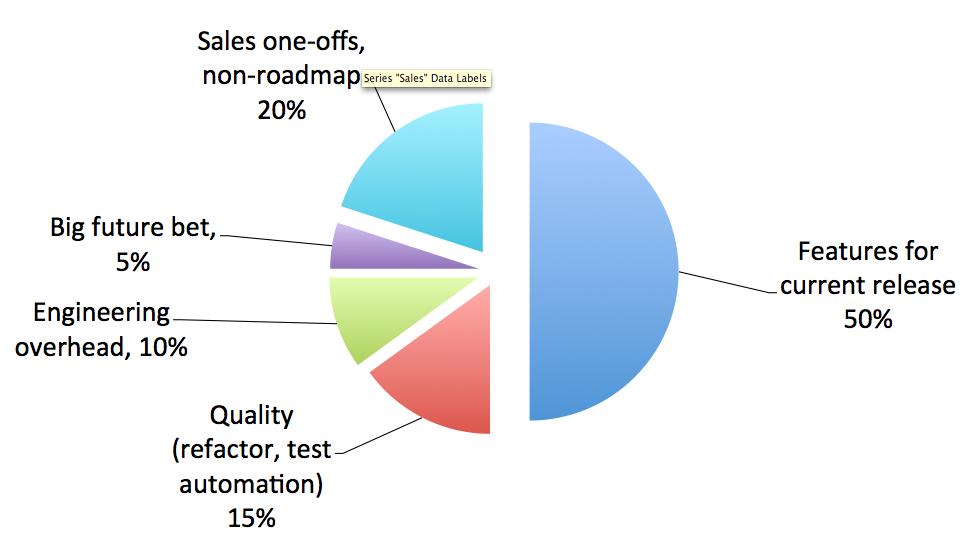
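To make the error-bar argument concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a recommended scoring method: the epic names, value numbers and story points are invented, and the "3x swing" is read as "the true value could be anywhere from one third to three times the estimate." It ranks a tiny backlog by value per story point, then flags every epic whose plausible range overlaps the range of the top-ranked item.

```python
from dataclasses import dataclass

SWING = 3.0  # assumption: a value estimate may be off by up to 3x in either direction


@dataclass
class Epic:
    name: str
    value: float   # business value estimate, in made-up units
    points: float  # development effort, in story points


def score_range(epic, swing=SWING):
    """Return (low, best, high) value-per-point for one epic."""
    best = epic.value / epic.points
    return best / swing, best, best * swing


def rank_with_overlap(epics, swing=SWING):
    """Sort by best-guess value per point.

    Each result is (name, best_score, overlaps_top), where overlaps_top is True
    when the epic's optimistic score reaches the top item's pessimistic score,
    meaning the estimates cannot really tell the two apart.
    """
    ranked = sorted(epics, key=lambda e: e.value / e.points, reverse=True)
    top_low = score_range(ranked[0], swing)[0]
    results = []
    for epic in ranked:
        _, best, high = score_range(epic, swing)
        results.append((epic.name, round(best, 2), high >= top_low))
    return results


# Hypothetical backlog, for illustration only.
backlog = [
    Epic("Workflow redesign", value=120, points=50),
    Epic("Connector pack", value=90, points=30),
    Epic("Legacy export", value=10, points=40),
    Epic("Real-time dashboard", value=100, points=40),
]

for name, best, overlaps_top in rank_with_overlap(backlog):
    print(f"{name:20s} {best:5.2f}  overlaps top item: {overlaps_top}")
```

With these invented numbers, the first three epics all overlap the top item while the fourth clearly does not. That is the t-shirt-size result: the arithmetic can separate the large from the small, but not #1 from #2 or #3.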

We’re forced to convert unlike things into a single currency, using artificial exchange rates that are hard to justify. This flattens a rich assortment of things (features, long-term R&D, architecture, technical debt reduction) into an easily sorted list, but IMO loses most of the subtlety and all of the interesting trade-offs. What’s the “exchange rate” between a small improvement in user workflow and fixing one more bug? And does that stay constant as our user experience gets much better or quality drops? I’ve argued at length that we need a portfolio strategy to decide how much to invest in planned features vs. test automation vs. one-off customer needs vs. pure research so that we don’t accidentally trade off entire categories of development work.
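One way to picture a portfolio approach, as a rough Python sketch: fix each category's share of capacity first, then rank items only against others in the same category. Everything here is hypothetical (the percentage shares, the 100-point capacity, the item names and scores); the shares themselves are exactly the kind of judgment call that no formula supplies.

```python
def allocate_capacity(total_points, shares_pct):
    """Split total capacity across categories using whole-number percentages."""
    if sum(shares_pct.values()) != 100:
        raise ValueError("category shares must add up to 100 percent")
    return {cat: total_points * pct // 100 for cat, pct in shares_pct.items()}


def plan_within_budgets(backlog, budgets):
    """Greedy fill: walk items by value per point, but charge each item
    against its own category's budget rather than one shared pool."""
    plan = {cat: [] for cat in budgets}
    remaining = dict(budgets)
    ordered = sorted(backlog, key=lambda i: i["value"] / i["points"], reverse=True)
    for item in ordered:
        cat = item["category"]
        if cat in remaining and item["points"] <= remaining[cat]:
            plan[cat].append(item["name"])
            remaining[cat] -= item["points"]
    return plan


# Hypothetical shares and backlog, for illustration only.
shares = {
    "planned_features": 60,
    "test_automation": 20,
    "customer_one_offs": 10,
    "research": 10,
}
backlog = [
    {"name": "Guided onboarding", "category": "planned_features", "value": 80, "points": 30},
    {"name": "Bulk import", "category": "planned_features", "value": 45, "points": 30},
    {"name": "Regression test suite", "category": "test_automation", "value": 10, "points": 20},
    {"name": "Custom report for BigCo", "category": "customer_one_offs", "value": 50, "points": 10},
    {"name": "Custom SSO for BigCo", "category": "customer_one_offs", "value": 45, "points": 10},
    {"name": "Ranking prototype", "category": "research", "value": 5, "points": 10},
]

budgets = allocate_capacity(100, shares)
plan = plan_within_budgets(backlog, budgets)
for category, names in plan.items():
    print(category, "->", names)
```

In a single sorted list, both BigCo one-offs would outrank everything else, and the test suite and research prototype would sit at the bottom. With per-category budgets, the second one-off is deferred and the low-scoring categories still get their agreed share.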

Great products are curated, with well-chosen collections of features that fit together to solve a specific set of problems. I’ve seen too many products, though, that reflect a build-whatever-has-highest-value model: overstuffed, hard-to-use, semi-random sets of tools that individually scored well on ROI. Des Traynor reminds us that most users spend their time on a very few functions, which is where we should focus design and development effort, and avoid the feature bloat that puts great products/great user experience at risk. I’d rather be building the next Slack than the next MSWord.

Of course, business value estimates are important. And of course, we should rough-sort the big-win-small-cost opportunities from the little-value-massive-waste items. Good estimates and good algorithms help find the top 10% of candidates in the backlog. But then we have to follow with a thorough, multidimensional review aligned to market strategy. Get the right handful of folks in the room and figure it out face-to-face-to-face.

Top-Down Meets Bottom-Up

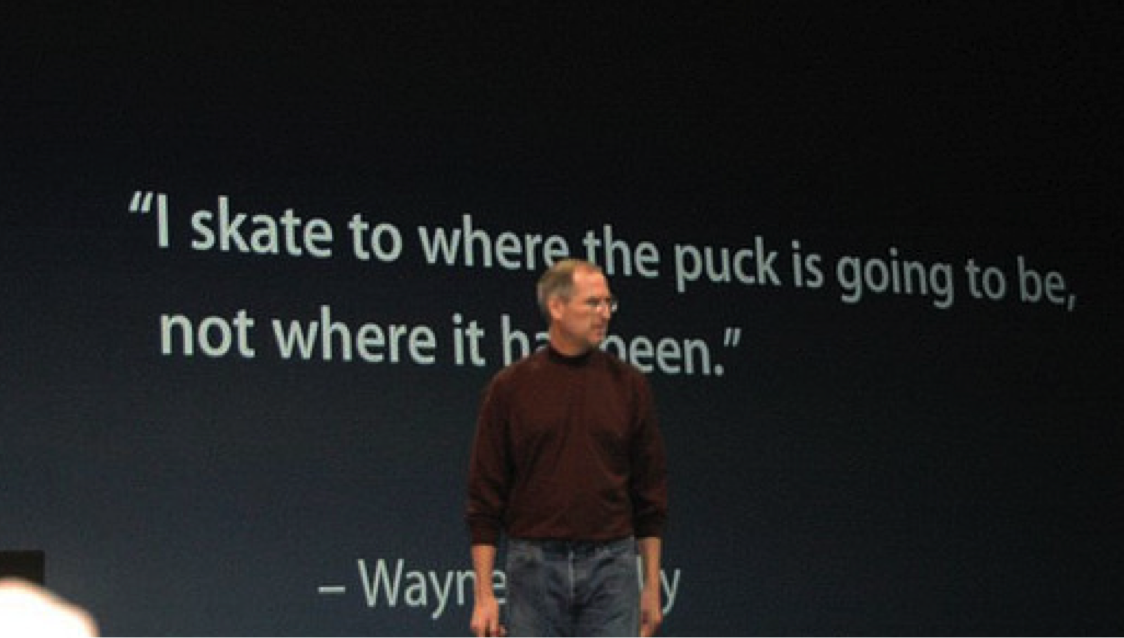

Ivory tower strategy has its own failure modes, including magical thinking. But solid planning has to blend top-down with bottom-up. Goal-setting combined with analytics. Jobs-to-be-done solution focus balanced against attention to emerging customer needs. We need a point of view, an opinion on how markets and users are evolving. (Quoting Walter Gretzky, via Wayne Gretzky and many others, we have to “skate to where the puck is going, not where it has been.”)

We have hard work to do – clear thinking and real market validation – to create a product strategy that’s supported by customer input, technical realities and financial logic. Delivering products/services that the market wants and we want to sell.

So closing out this long post and longer thought, we need a fourth law…

The Law of Strategic Judgment

Voice of the Customer mechanisms, user forums and business value analytics are all important inputs to a product direction and prioritization of the short-term backlog. But none of them deliver definitive answers. As executives and product leaders, we have to weigh many inputs, make hard trade-offs, synthesize a strategy, and own the (uncertain future) results. We have to develop and apply judgment.

By the way, our competitors are gathering similar data from our target audience. Tracking similar trends, talking with the same industry analysts, crunching the same numbers. We can’t all succeed with identical offerings. Strategy includes knowing what we are better at (logistics? distribution? design?) and segmenting our audience for competitive advantage (follow the obvious all-mobile trend or double down on laptop/desktop users?). We are paid to listen, then think, then act strategically.