I often find myself siding with old-school agilists who believe that teams (and their product managers) must continually experiment their way to good processes and collaboration, rather than “best practices” folks who believe there’s a fundamentally right way to do things. I take the position that categorical imperatives are always wrong.

Here’s an instance to work through…

“My development team tells me that my user stories need more detail.”

I hear this from product managers almost every week, often when they are looking for yet another perfect user story template or process flow. Sigh. I see this as a missed opportunity for team-building, collaboration, and work reduction, rather than yet another burden on overworked product managers. Especially when those same product managers follow up with “now my development team tells me that my user stories are too prescriptive, too focused on HOW, and don’t give them the room to creatively solve from a good WHAT statement.” Huh? What’s really going on?

A Grossly Unfair Generalization

I find that most developers love to talk in absolutes and generalities. (“Scrum is better than Kanban.” “Slack is God’s gift.” “No, Slack is an appalling drain on our attention.” “Sales people don’t listen.” “Execs only care about short-term revenue.” “We should only hire developers with CS/EE degrees from well-known universities.” “No, the best scrappy developers are all self-taught.”) Developers enjoy the intellectual jousting of technical debate, proving who is “smarter” or faster on their mental feet*. So I’m not surprised when someone on the team combines absolutism with recency bias, converting “this particular user story is skimpy” to a more universal “all of our stories need more detail.”

But as product managers, we need to hear what folks say and then dig aggressively for underlying issues. We listen skeptically when a paying customer tells us how to fix something. We don’t transcribe requests verbatim and hand them over to our development team. Instead, we unpack and question and validate and look for patterns across the user base…

“My development team tells me that my user stories need more detail” deserves the same thoughtful inquiry. All stories? What’s missing? Is this leading to bad software, or just additional conversation? Are user stories the right way to fix what’s wrong? How do we understand this comment if (last week) we heard the opposite? This could be a real team-wide headache or general grumbling or one developer’s assumption that a product manager’s only job is to write perfect user stories.

And notice that I can’t resolve this issue in my own head: it requires talking with my team, extracting real opinions, and collectively dissecting those opinions. Applying good product analysis internally. Collaboratively agreeing on next steps.

Let’s Unpack This as a Team

If this hasn’t already come up naturally in our retrospectives**, I might briefly raise this with the team at the end of tomorrow’s standup: “I’ve heard some concerns that our user stories lack detail or completeness. Quick show of hands for agreement? If we need the whole team to spend 45 minutes discussing approaches and process improvements, who sees that as a good investment of time?”

Maybe it’s just grumbling, and no one really cares. Most of an hour sounds like a lot, even though we waste that every day walking to Philz for designer coffee. But if it’s a real irritant, the team should be willing to make time.

Assuming we’ve agreed on a meeting, let’s also redefine the problem to be more specific, more tractable, less universal: “I’ve heard that my stories are too long and too short. Possible that both are true for different kinds of stories. Let’s look over 8 or 10 different stories, get your quick votes on which are too long or too short, and why. We might notice some patterns about WHICH kinds of stories are in each pile. Or how to improve the story cycle.”

That gives the team a framework for more constructive discussion and shared empathy as we inspect actual examples. We fight recency bias by looking carefully at the data. The last time I ran this tiny exercise, we collectively identified a few different kinds of stories, each needing its own treatment:

[1] Highly technical, not much product management value

It turns out that a large portion of my stories were entirely obvious, implementation-heavy, and a single phrase would have been sufficient. (“allow users to enter phone number with or without hyphens,” “schedule database re-indexing for non-business hours,” “test and support iOS 12.3,” “instrument freeform text box in help menu.”)

I was unintentionally annoying the team with pages of commentary, self-evident restatements of business value, and naïve technical suggestions. My audience (i.e., the development team) wanted just two things: test boundaries and dependencies. So I could reduce their frustration while doing less work – if we could sort the right stories into this category. Time saved, blood pressure reduced.

[2] Heavy user interaction, design mocks, workflows

(“We have to capture postal code before we can compute shipping costs, but this new workflow skips that for re-orders.”)

We identified that my user stories were getting in the way: shoehorning nuanced design work into story templates and discarding important context.

We agreed to run an experiment for a few weeks: attaching full design mocks to succinct stories and scheduling a whiteboard walkthrough for our designer, the two developers most involved in that workflow, and myself (to take action items). If that didn’t go well, we’d try something else, because results matter more than formalities.

[3] Validation experiments

The whole team was excited to interact with real users, run lightweight experiments on major improvements, and dig into activity data. Collaborative, hands-on learning refreshed our sense of mission and importance. (No surprise: this is what brings joy and meaning to what we do.) The team was bursting with ideas, frustrated by a lack of energetic discussions and emotional engagement, feeling relegated to HOW when they hungered for WHAT and WHY.

And they had tremendous insights into good experimentation, once everyone was included. (“Here’s another possibility for why prospects are dropping out of our funnel at step 3b.” “We’re already getting response data from email campaigns. Can we use that instead of adding new landing page instrumentation?” “Running the experiment that way will take months to gather statistically valid data. Instead, let’s try…”) Smarter validations, shared context, and more passion for the work. Plus, it helped that we called these ‘experiment design sessions’ instead of ‘meetings’ because everyone hates meetings.

[4] Goldilocks stories

Some of my stories were not too long, not too short, with appropriate detail and useful content. JUST RIGHT. Product managers aren’t entirely egoless, so I felt OK fishing for the occasional “yes, that one was spot-on, we wouldn’t change a thing.” If it ain’t broke, don’t fix it.

Your team would (of course) come up with other issues and categories and reactions. But notice that a single, canonical, obligatory, fully-realized “best practices” user story format will fail us in different directions depending on the problem at hand. And will add dozens of hours to our product management week – creating unneeded content that our teams may ignore, or have to slog through – instead of focusing our limited time on what drives real product outcomes.

So I generally lean on the broad agile principles that start with “valuing individuals and interactions over processes and tools” before accepting procedural assertions. Standups are valuable if we run them well and know why they add value, especially if we’re willing to experiment with alternatives. Estimation is essential to stay in business, but there are lots of techniques beyond Planning Poker. (Here’s one from Ron Lichty that I love.) Scrum is good except when it becomes dogmatic or inflexible or self-important.

I’m deeply skeptical of best practices and consultant/trainer-provided frameworks and one-starting-point-fits-all. Building great software is much more like writing a best-selling novel (or a hit song) than digging a ditch, so we have to engage our whole teams’ full talents to create brilliance.

Sound Byte

We bring our critical eye and analytic product temperament to customer-facing demands. What users say they want is not always what they want, or what’s good for them. We should bring the same perspective to team-level issues: what’s really going on? How can we collaboratively engage the team? Can we try inexpensive experiments rather than argue about who’s right?

* From my limited sampling, I see less of this dynamic in more diverse teams, especially where women and other under-represented folks feel they belong, are respected, and actively participate in conversations. As product leaders, we can carefully moderate discussions so that every important observation is heard. See more excellent thoughts about inclusion at Better Allies.

** Retrospectives may be the most important agile ritual, and they depend on trust. Every week (or two), the whole team should share what’s working – and what’s not working – with the generous assumption that everyone is doing their best. “What can we try next week?” Organizations and processes are the usual culprits. Here’s the classic reference on retrospectives.

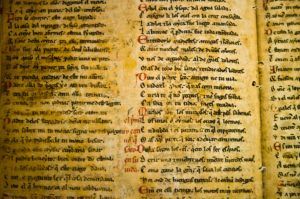

Photo credits: PostIt by AbsolutVision, Latin book by Mark Rasmuson, workflow from Campaign Creators.